Optical flow estimation is challenging and not well-defined in situations with transparent or occluded objects. In particular, optical flow can inherently only provide a single displacement vector for each pixel in the directly visible part of the reference frame. As a result, transparent objects and occlusion relationships cannot be represented accurately. In this work, we therefore turn to amodal perception to address these challenges at the task level. Amodal perception considers objects as a whole, and our proposed Amodal Optical Flow task follows this concept: Instead of predicting a single pixel-level displacement field for the whole image, we propose to predict a multi-layered pixel-level displacement field that encompasses both visible and occluded regions of the scene.

By enforcing occlusion ordering on the predicted layers, amodal optical flow encourages explicit reasoning about occlusions. This not only allows for a more comprehensive handling thereof, but also provides a better representation of the complex interactions between elements in dynamic scenes. Therefore, amodal optical flow is closer to the true 3D nature of the scene, which is particularly relevant for dynamic scene understanding in the context of robotic applications. Furthermore, the layered strategy and explicit occlusion reasoning also lend themselves more naturally to multi-frame sequence predictions. Object tracking is a concrete example that can benefit from amodal optical flow.

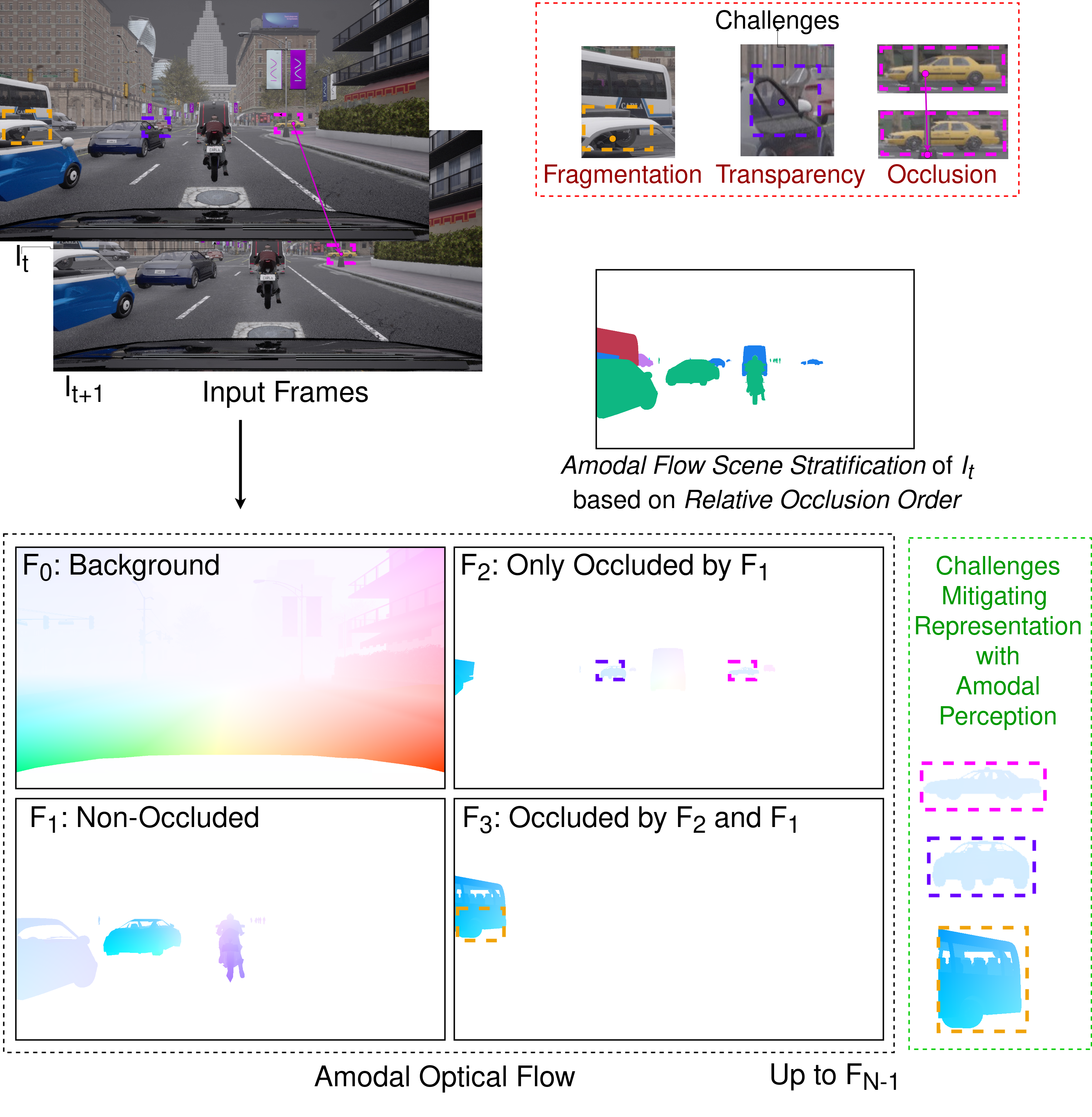

Figure: Overview of the amodal optical flow task. Amodal optical flow can represent transparent and partially occluded objects while also reducing the fragmentation of object segments through amodal (visible + occluded) motion representation of scene elements.

The general objective of amodal optical flow is to capture the motion of scene components in the image domain between consecutive frames \( I_t \) and \( I_{t+1} \), but in contrast to normal optical flow, we aim to do this for both visible and occluded regions. Inherently, this therefore requires estimating potentially multiple displacement vectors per pixel. We formulate this as estimating a set of motion fields \( \mathcal{F} = \{F_i\}_i\). For each overlapping object or region \( i \) the pixel position \( p \) in frame \( I_t \) is then mapped to its probable new position \( q_i \) in frame \( I_{t+1} \) via \( q_i = p + F_i (p) \).

To make the problem tractable for large dynamic scenes, each field \( F_i \) may contain multiple objects/regions; however, overlapping regions must always be placed on separate fields. We enforce these constraints and ensure that \( \mathcal{F} \) is well-defined and has a known order via a scene stratification strategy. In particular, we separate the scene into background and foreground, where the foreground category contains all objects of interest (e.g., traffic participants in the autonomous domain) and the background category is largely dominated by amorphous regions (including roads, walls, and sky) and other static objects (e.g., traffic signs, traffic lights, and trees) that are not classified as foreground.

With this, we define \( F_0 \) as the motion field for the background, where we do not handle overlaps and occlusions. \( F_1 \) to \( F_{N-1} \) capture the motion of potentially overlapping foreground objects. More specifically, we define \( F_1 \) to \( F_{N-1} \) to be in relative occlusion order: \( F_1 \) represents the motion of all unoccluded foreground objects, whereas \( F_{k+1} \) represents the motion of all objects that are occluded by objects on layers \( F_{k}, F_{k-1}, \ldots, F_{1} \) only. For example, \( F_{3} \) represents the motion of all foreground objects that are occluded by other objects on layers \( F_{2} \) and/or \( F_{1} \), but apart from that are unoccluded. Note that \( F_{i} \) is only defined in regions with at least \( i \) overlapping objects. In practice, we realize this by predicting separate assignment masks for each layer in addition to the displacement field.

Importantly, this strategy remains largely independent of the specific scene element classes and encourages motion estimation without heavy reliance on object recognition. For a more detailed description of the task as well as evaluation metrics, please refer to our paper.

To demonstrate the feasibility of amodal optical flow, we present AmodalFlowNet as a neural-network-based reference implementation. At its core, AmodalFlowNet consists of a hierarchical-recurrent amodal flow estimation module that supports predicting amodal flow for a theoretically unlimited number of overlapping objects without the need for re-training the model to change the number of amodal layers. It is thus capable of handling arbitrarily complex, dynamic scenes. To create the full AmodalFlowNet model, we embed our module into a pre-trained state-of-the-art standard optical flow estimation method, FlowFormer++.

Figure: Overview of our proposed amodal flow estimation module. Flow and corresponding amodal masks (yellow blocks) are estimated recurrently over both refinement steps (outer, blue arrow) and amodal layers (inner, green arrows). The decoder structure for the standard optical flow is retained from the baseline model. Additional semantic and mask predictions (red, green, and purple blocks) guide the network.

Our amodal flow estimation module (depicted above) builds on RAFT's recurrent update block. In particular, we employ three update blocks as flow decoder modules, taking the one from the baseline approach for standard flow estimation, adding another for the background flow, and lastly a third one shared across all amodal object layers to predict amodal flow. We exploit their hidden state \( h \) (depicted in gray) to facilitate hierarchical connections between the decoders. In each outer refinement iteration (blue arrow), we begin by estimating standard optical flow with the baseline decoder (estimation in each refinement iteration follows the green arrow). We then pass the updated hidden state \( h_{\mathrm{fg}}^{(t)} \) of that on to the background flow decoder, which also employs its own hidden state \( h_{\mathrm{bg}} \). Similarly, the amodal flow decoder takes in the updated hidden states of the standard and background decoders (\( h_{\mathrm{fg}}^{(t)} \) and \( h_{\mathrm{bg}}^{(t)} \)) and of the previous amodal layer \( h_{\mathrm{am},k-1}^{(t)} \) in addition to its own per-layer hidden state \( h_{\mathrm{am},k} \). Thus, information is propagated in a hierarchical and recurrent manner from unoccluded foreground objects to more occluded objects further in the background of the scene, enabling the occlusion-ordered prediction. To improve results, we split the amodal layer masks into visible (green) and occluded (purple) parts and predict those separately before predicting the full masks (yellow). Additional semantic decoders (output in red) are employed to further guide the network and improve its object reasoning capabilities.

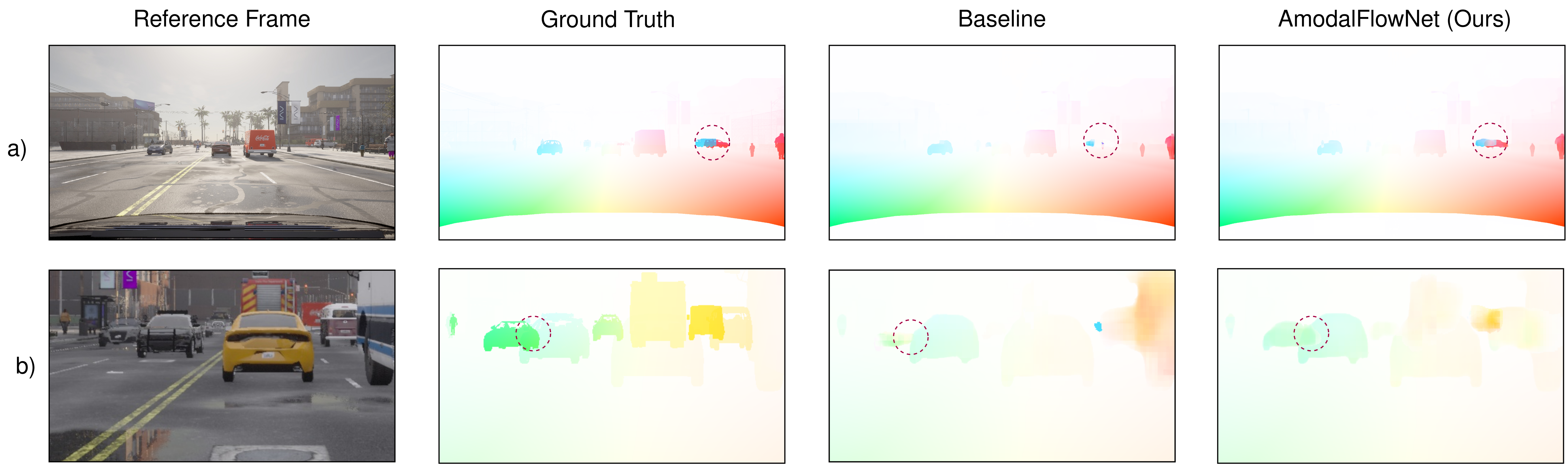

Figure: Qualitative comparison of our proposed AmodalFlowNet and a baseline without semantic and mask-based guidance. For visualization, we sequentially superimpose the multi-layer amodal optical flow predictions, in order of \(F_0, F_1, \ldots, F_{N-1}\).

We extend AmodalSynthDrive with ground truth for amodal optical flow. AmodalSynthDrive is a synthetic multi-modal dataset for amodal perception in the automotive domain, consisting of 150 driving sequences with a total of 60,000 multi-view images, 3D bounding boxes, LiDAR data, odometry, and amodal semantic/instance/panoptic segmentation annotations. Furthermore, it spans a wide variety of different scenarios involving diverse traffic, weather, and lighting conditions.

We make ground-truth data for amodal optical flow available at amodalsynthdrive.cs.uni-freiburg.de.

A software implementation of this project based on PyTorch, including trained models, can be found in our GitHub repository. The code is licensed under the Apache 2.0 license for academic use. For any commercial purpose, please contact the authors.

This work was funded by the German Research Foundation Emmy Noether Program grant number 468878300 and an academic grant from NVIDIA.